If you are thinking about building completely serverless APIs, or never heard that it was possible, this post is for you. I’m going to compile a few lessons I’ve learned over the past 3-4 years while shipping a few production projects without managing any servers at all.


Be advised

Most of what I’m going to talk about in this post depends heavily on AWS. AWS has been my cloud of choice for at least 8-10 years, so if AWS isn’t what you are looking for, you will be profoundly disappointed with this article. :’)

How does it work?

Of all the things I’ve tried, I’ve found only two libraries and frameworks that produce a “good enough” outcome from a maintenance point of view.

API Gateway

Before moving into more detail, it’s important to know that, at the moment, the only service available for truly serverless APIs is AWS API Gateway. So regardless of which library you choose, it will always rely on API Gateway and Lambda. API Gateway is AWS’s take on the sidecar gateway pattern.

The sidecar gateway pattern deploys the gateway as an ingress and egress proxy alongside a microservice. This enables services to speak directly to each other, with the sidecar proxy handling and routing both inbound and outbound communication.

In other words, API Gateway was made to be pretty much a wrapper on top of all your microservices. You can create a completely different API interface on top of your existing APIs, changing responses, resources, and all sorts of things. But there is one feature that is particularly powerful if you want to build serverless APIs: the ability to proxy requests from an endpoint to a Lambda function.
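To make the proxy idea concrete, here is a minimal sketch of a Lambda function sitting behind an API Gateway proxy integration. It assumes the REST API (payload v1) event shape, where the request arrives as a dict with `httpMethod` and `path`, and the `/health` route is a made-up example, not something from a real project.

```python
import json


def handler(event, context):
    """Entry point Lambda calls for every request API Gateway proxies to it."""
    # With proxy integration, API Gateway passes the HTTP request through
    # as a dict: method, path, headers, query string and body.
    method = event.get("httpMethod", "GET")
    path = event.get("path", "/")

    # Hypothetical routing: one health-check route, everything else is a 404.
    if method == "GET" and path == "/health":
        status, payload = 200, {"ok": True}
    else:
        status, payload = 404, {"error": f"no route for {method} {path}"}

    # The response has to follow the proxy integration shape:
    # a status code, optional headers, and a string body.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }
```

Notice that the function does its own routing here. That is exactly the part both tools below try to take off your hands, each in its own way.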

Apex Up

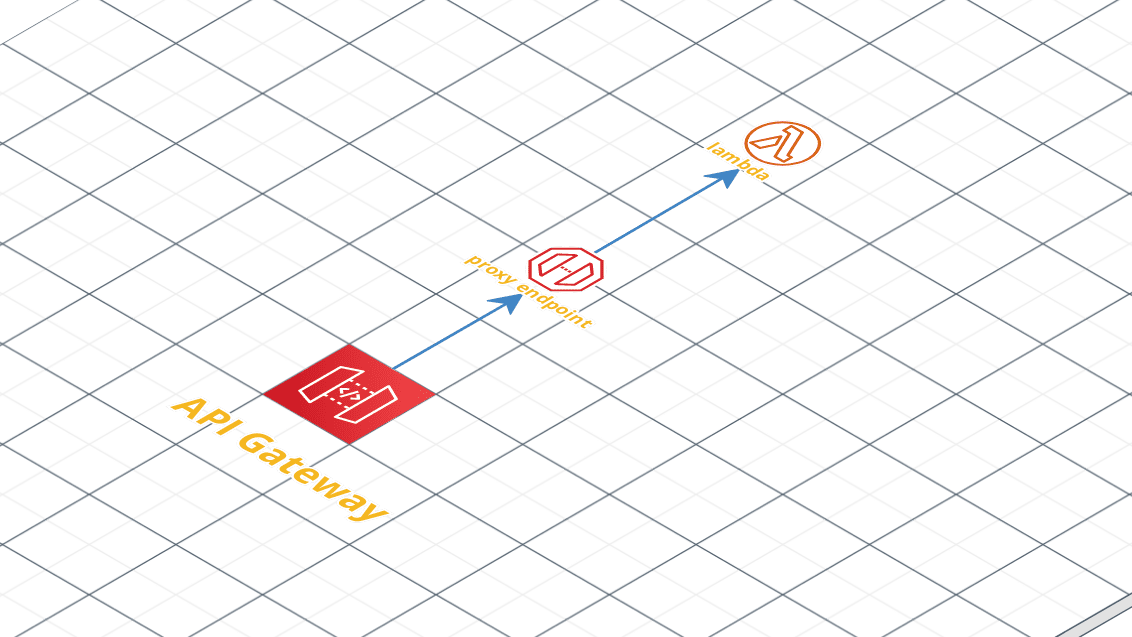

From the creator of Express, Mocha, Koa, and a gazillion other open-source projects. It brings a more maintainable design to the table for 90% of people. After deployment, your stack will look like this:

Up deploys an API Gateway interface for you using a {proxy+} resource, adds a network proxy in between, and seamlessly forwards all your incoming requests to your Lambda function. The good thing here is that the “network proxy” in between gives us the ability to create an application that looks like an actual app and not a Lambda (no handlers, etc.). For example, your API could still be a fully-fledged Express API and you wouldn’t even notice.
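To show what “looks like an actual app” means, here is a rough sketch of a plain HTTP server with no Lambda handler anywhere. It uses Python’s standard library instead of Express to stay dependency-free. It assumes Up hands your app its port through a `PORT` environment variable; the fallback port 3000 and the `/health` route are placeholders of mine.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class App(BaseHTTPRequestHandler):
    """A regular HTTP app: routes and responses, no Lambda event in sight."""

    def do_GET(self):
        if self.path == "/health":
            self._send(200, {"ok": True})
        else:
            self._send(404, {"error": "not found"})

    def _send(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the sketch quiet


def build_server(port=None):
    """Bind to the port from the environment (assumed to be set by Up)."""
    if port is None:
        port = int(os.environ.get("PORT", "3000"))  # 3000 is a placeholder
    return ThreadingHTTPServer(("127.0.0.1", port), App)


# To run it as an app: build_server().serve_forever()
```

The same file runs on your laptop, in a container, or behind the proxy, which is the whole appeal.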

Serverless Framework

https://github.com/serverless/serverless

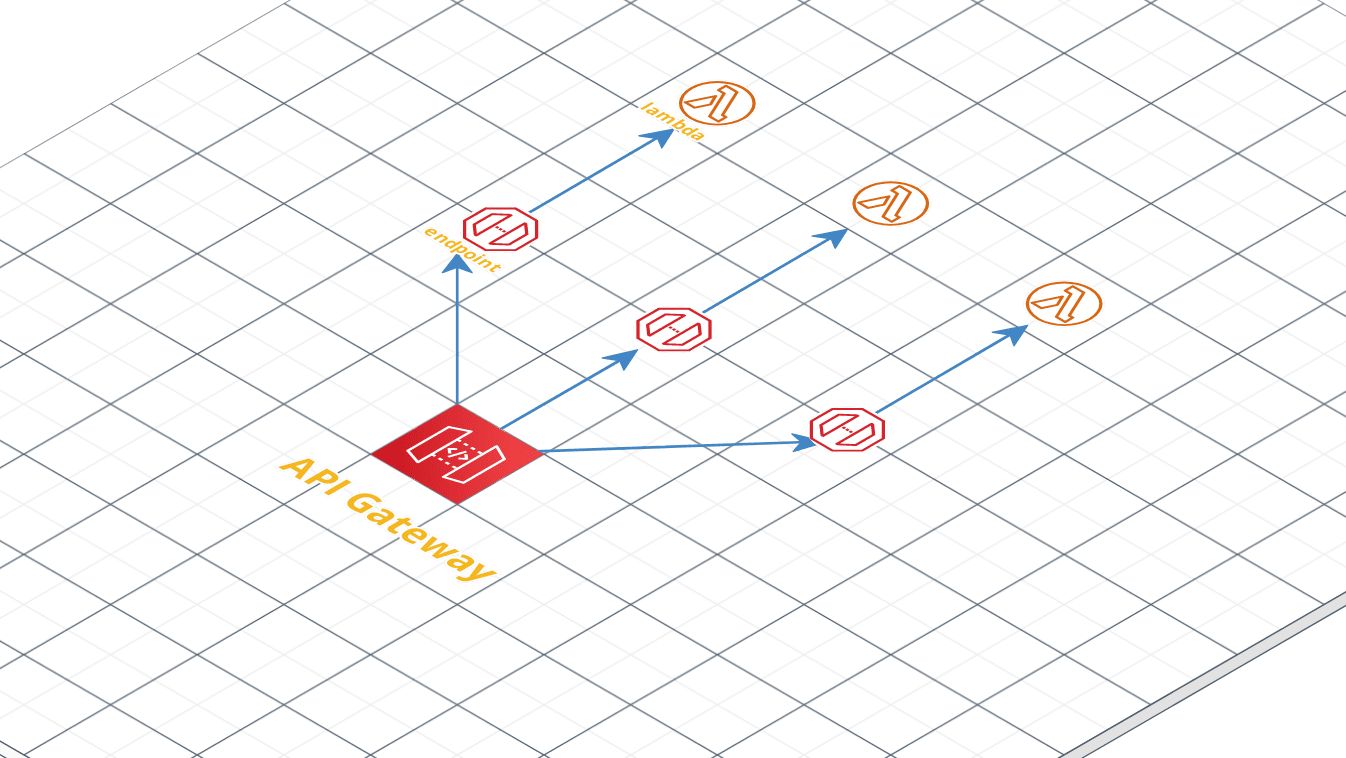

With the Serverless Framework, you can use a combination of plugins that can help you to create the API Gateway endpoints and proxy them to lambda functions, and your stack would look like this:

If you want more “granular” control over the resource allocation and the language behind each Lambda function, your best bet is the Serverless Framework. It deploys one Lambda function for each API endpoint you have. I personally don’t use it much, as I prefer Up for most of my use cases.

Which one is better?

I use Up in most cases, mostly because your code looks exactly the same as a regular application. It doesn’t look like you are writing a Lambda function, so there is no development friction within your team. And if for any reason you decide to move to a containerized application, you can do it pretty easily: you won’t have to change anything in the code itself, so there is no heavy architectural change.

“If you are at a crossroads between two equally good options, pick the one that is easier to change in the future.”

Someone smarter than me, whose name I don’t remember

Serverless Framework is that bazooka we sometimes hear about. It looks like a Boeing dashboard, with so many options and features. It’s great if you want more flexibility and you know what you are doing 🙂

When to use it?

- Unpredictable traffic. Since there aren’t servers involved, this type of stack is known for being able to handle crazy levels of concurrency and throughput. If your API has unpredictable request spikes that are hard to handle with auto-scaling, or only by throwing money at the problem, this could be your solution.

- Background job processing. I know it sucks, but sometimes jobs have to make API calls ¯\_(ツ)_/¯. If you have tons of jobs to process in a short period of time and you don’t want to bring down your services, this stack can be useful.

- Side projects. It is extremely cost-effective and great for deploying your ideas in a matter of minutes. Who wants to pay $10-20/month for something you don’t even know is going to work, right? Paying per request is a good morale booster if you still haven’t found the courage to ship your idea because of monthly costs.

Be Aware

- If your API depends on native binaries, packaging your application might become tricky, since you need to pre-compile all of them and add them to your lib/ folder before deploying. In that case, unless you are experienced with compiling custom binaries (or you like pain and suffering), managing containers might be easier.

- Keep your APIs slim. Lambda caps the unzipped deployment package at 250 MB, so your bundle can’t exceed that (unless you have binaries, this is pretty hard to reach).

Common Issues (and How to Fix them)

Cold Starts

If you are not using this stack for a user-facing API, you might want to skip this one.

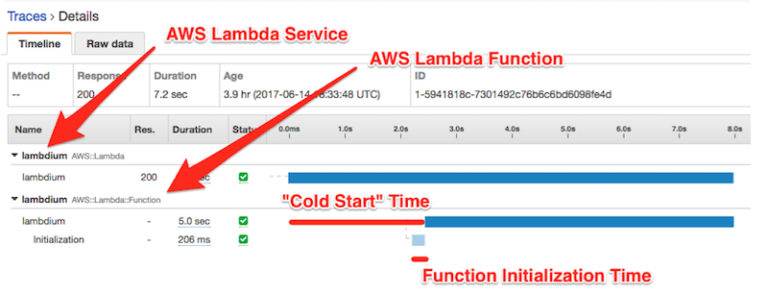

There is an inherent characteristic of Lambda functions that people call “cold starts”.

As concurrency increases, AWS keeps increasing the number of “warmed” Lambdas available to handle your traffic, but it doesn’t keep them around for long if you don’t use them.

So if you have an API that doesn’t get used too often, without provisioned concurrency you can sometimes see a jump in response time, which is a new Lambda booting up to handle your current concurrency demand.

How to fix it?

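If you are managing just Lambda functions or using the Serverless Framework, you can fix it with “provisioned concurrency” (not to be confused with reserved concurrency, which only sets aside or caps concurrency for a function and doesn’t keep anything warm). It’s an option available in your Lambda configuration: AWS keeps the number of instances you ask for initialized, so calls within that number go through “warmed” Lambdas. Provisioned concurrency is charged extra.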

If you are managing APIs (using Up), this is where it gets tricky:

When Lambda allocates an instance of your function, the runtime loads your function’s code and runs initialization code that you define outside of the handler. If your code and dependencies are large, or you create SDK clients during initialization, this process can take some time.

This means that, since Up owns the handler, only the handler gets “warmed up” and the function doesn’t really initialize, so you have a few options:

- If you have a monitoring tool like Apex Ping, you can configure it to ping your API and keep it warm for you.

- If you need a specific amount of concurrency, you can write some code that concurrently “pings” your API every X minutes, which keeps that many containers running and ready to handle traffic. If you don’t want to do that, Up Pro lets you configure the concurrency you need and keeps it warm for you. Up Active Warming is billed as regular Lambda usage, with no extra charge.

- If having cold starts is not an issue but you just want to reduce the cold start time, combining provisioned concurrency with more memory for your Lambda can cut your cold start time in half or more.
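If you go the “write your own pinger” route from the list above, it could look roughly like this. It is a sketch under assumptions: the URL is a placeholder, the `warm` and `http_ping` names are mine, and the scheduling every X minutes is left to whatever scheduler you already have.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def http_ping(url, timeout=10):
    """Issue one GET request and return the HTTP status code."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


def warm(url, concurrency, ping=http_ping):
    """Fire `concurrency` requests at the same time and collect the statuses."""
    # One worker per request so the calls overlap instead of queueing up.
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(ping, [url] * concurrency))


# Example call with a hypothetical endpoint:
# warm("https://api.example.com/health", concurrency=5)
```

Keep in mind the requests only land on separate instances if they actually overlap. If your health route answers too quickly, a single warm Lambda may serve several pings in a row, so you may end up with fewer warm instances than you asked for.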

Blowing up Database Connections

Lambdas are designed to handle one call at a time, in isolation, so a single instance never handles calls concurrently. If you call a Lambda function twice in parallel, it will spawn two different instances and handle the calls independently. If you call it again afterwards, there are two warmed Lambdas in the “pool” and one of them will be re-used without creating another one (no cold start). So if your environment has crazy levels of concurrency, like 1000 API calls at the same time, you might have 1000 clients connected to your database just to handle 1000 API calls. Not good. If you misconfigured your connection pool with, say, 10 connections per Lambda, you would have 10k database connections at once. (╯°□°)╯︵ ┻━┻
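The math is simple enough to write down. Here is a tiny sketch of it; the function names and the database limit of 5000 are made up for illustration, so plug in your own numbers.

```python
def max_db_connections(concurrent_lambdas, pool_size_per_lambda):
    """Worst case: every warm Lambda opens its whole connection pool."""
    return concurrent_lambdas * pool_size_per_lambda


def fits_database(concurrent_lambdas, pool_size_per_lambda, db_max_connections):
    """True if the worst case stays within what the database accepts."""
    needed = max_db_connections(concurrent_lambdas, pool_size_per_lambda)
    return needed <= db_max_connections


# The two scenarios from the paragraph above:
print(max_db_connections(1000, 1))   # 1000
print(max_db_connections(1000, 10))  # 10000

# Against a hypothetical database that accepts 5000 connections:
print(fits_database(1000, 1, 5000))   # True
print(fits_database(1000, 10, 5000))  # False
```

Since each Lambda only handles one call at a time anyway, a pool size above 1 per Lambda rarely buys you anything.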

How to fix it?

Serverless Databases: If you can, use a serverless database like DynamoDB. It will work just fine because you don’t keep active connections to DynamoDB.

Amazon RDS Proxy: If a serverless database is not an option, AWS offers RDS Proxy, which you can configure directly from the AWS console (https://aws.amazon.com/blogs/compute/using-amazon-rds-proxy-with-aws-lambda/). The proxy manages the connection pool for you, so your database doesn’t get tons of new connections as concurrency increases.

Performance Issues

One thing that isn’t obvious at first: you can’t configure the amount of CPU allocated to your Lambda function. It’s all about getting the right memory setting for your use case.

When you scale up the amount of memory allocated to your Lambda, the amount of CPU, networking performance, and so on increase with it. It means that your Lambda might be OK memory-wise but could still have its performance drastically improved just by increasing the amount of memory allocated to it.
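A quick back-of-the-envelope sketch of why more memory isn’t automatically more expensive: Lambda bills compute as memory multiplied by duration (GB-seconds). The durations below are invented numbers for illustration. Whether your function actually gets faster with more memory is something you have to measure.

```python
def gb_seconds(memory_mb, duration_ms):
    """Billed compute for one invocation: memory (GB) times duration (s)."""
    return (memory_mb / 1024) * (duration_ms / 1000)


# Hypothetical measurements: doubling the memory halves the duration.
small = gb_seconds(memory_mb=512, duration_ms=800)
large = gb_seconds(memory_mb=1024, duration_ms=400)

print(small, large)
```

In this made-up scenario both configurations bill the same amount of compute, but the second one answers twice as fast. If the speedup is smaller than the memory increase, you pay more, so test a few settings before committing.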

If you think that the reason why you are having performance issues is code-related, you can set up X-Ray for your lambda function and identify the bottleneck.

Native Binaries

All Lambda runtimes are built on top of Amazon Linux images. This means that if your project uses native binaries compiled on your local machine, they might not be compatible with the Lambda runtime.

If that’s your case, Docker is your friend. You can use a combination of Docker Compose + Amazon Linux images to build your dependencies before packaging and deploying your app.

Managing Secrets

At the moment, Lambda doesn’t natively support injecting secrets as environment variables. If you are using the Serverless Framework, all you can do at this point is add them to Secrets Manager or Parameter Store and read them when your Lambda boots up, using any available AWS SDK. Make sure you do that outside of the function handler.

If you are using Up, you can just use up env and it will be handled for you on a per-environment basis (under the hood, it is also managed via Secrets Manager).
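Here is a rough sketch of reading a secret from Parameter Store once per Lambda instance instead of on every request. It assumes boto3 (which ships with Lambda’s Python runtimes) and a parameter name I made up, `/my-app/production/DATABASE_URL`. The `make_secret_loader` helper is mine, not part of any SDK.

```python
import functools


def fetch_from_parameter_store(name):
    """Read one SecureString parameter using boto3."""
    import boto3  # imported lazily so the sketch loads without boto3 installed

    ssm = boto3.client("ssm")
    result = ssm.get_parameter(Name=name, WithDecryption=True)
    return result["Parameter"]["Value"]


def make_secret_loader(fetch=fetch_from_parameter_store):
    """Wrap a fetch function so each secret is only fetched once per instance."""

    @functools.lru_cache(maxsize=None)
    def load(name):
        return fetch(name)

    return load


# Lives at module level, outside the handler, so warm invocations share it.
load_secret = make_secret_loader()


def handler(event, context):
    # First call on a fresh instance hits Parameter Store; later calls on the
    # same warm instance get the cached value. The name is hypothetical.
    database_url = load_secret("/my-app/production/DATABASE_URL")
    return {"statusCode": 200, "body": "ok" if database_url else "missing"}
```

If you would rather pay the fetch during initialization instead of on the first request, call `load_secret(...)` once at module level right after creating it.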

Testing Locally

If you are using Up, this won’t be a problem since you just start your app normally. If you are using the Serverless Framework, AWS has an in-depth tutorial on simulating the Lambda environment locally with SAM.

Wrap Up

As you can see, when used to solve the right problem and with respect for the limitations of the environment, this is a very strong card to keep up your sleeve.

If you faced something different, comment below (づ ̄ ³ ̄)づ.
